Yet another Fluentd deployment for Kubernetes

Date June 27th, 2018 Author Vitaly Agapov

Logs from running services have to be available to developers and everyone else involved. That access should not be complicated: no kubectl magic and no dashboards (when we are talking about Kubernetes). It is quite easy to start exporting all logs from a Kubernetes cluster to Elasticsearch. The most straightforward way is:

git clone https://github.com/fluent/fluentd-kubernetes-daemonset

cd fluentd-kubernetes-daemonset

sed -i "s/elasticsearch-logging/MY-ES-HOST/" fluentd-daemonset-elasticsearch-rbac.yaml

kubectl apply -f fluentd-daemonset-elasticsearch-rbac.yaml

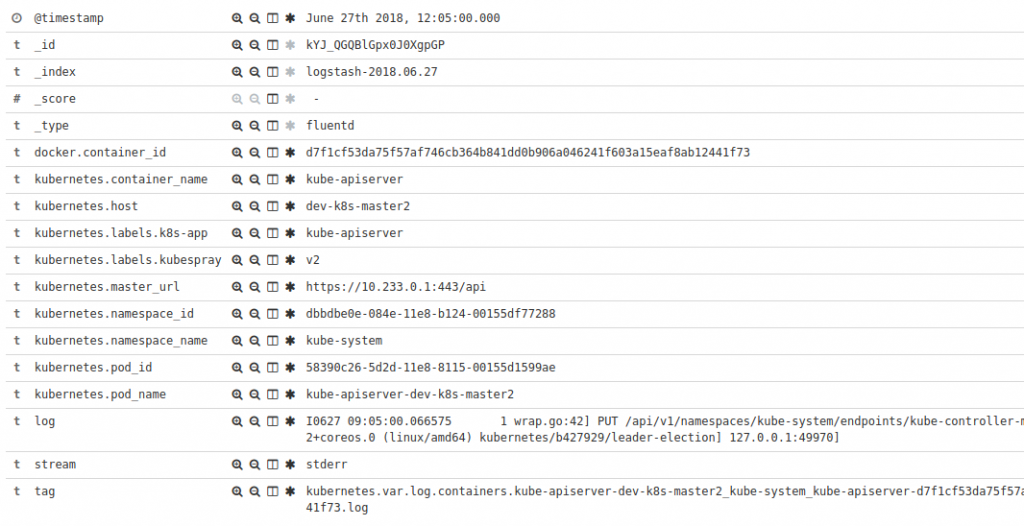

This works as expected, but the volume of exported data can be larger than we want. First, every entry contains fields that are useless for most of us, such as container_id, pod_id and namespace_id. Second, there are tons of access logs from the apiservers.

To change Fluentd's behaviour we need to modify its config file. The first modification adds a new filter based on the record_transformer plugin. It gets rid of the useless fields and saves a large amount of the disk space taken by the Elasticsearch indexes.
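As a rough illustration of what the filter's embedded Ruby does, here is a minimal sketch that applies the same delete calls to one record in plain Ruby, outside Fluentd. The sample record and the strip_metadata helper are made up for this example; real records come from the kubernetes_metadata filter and will carry different values and possibly other fields.

```ruby
require "json"

# Hypothetical helper: runs the same delete calls as the filter's ${...} expression.
def strip_metadata(record)
  record["docker"].delete("container_id")
  record["kubernetes"].delete("annotations")
  record["kubernetes"]["labels"].delete("pod-template-hash")
  record["kubernetes"].delete("master_url")
  record["kubernetes"].delete("pod_id")
  record["kubernetes"].delete("namespace_id")
  record
end

# Invented sample record, shaped roughly like kubernetes_metadata output.
record = {
  "log" => "GET /healthz 200\n",
  "stream" => "stdout",
  "docker" => { "container_id" => "0123456789abcdef" },
  "kubernetes" => {
    "container_name" => "web",
    "namespace_name" => "default",
    "pod_name" => "web-5d4f8c7b9-x2x7k",
    "pod_id" => "11111111-2222-3333-4444-555555555555",
    "namespace_id" => "66666666-7777-8888-9999-000000000000",
    "master_url" => "https://10.0.0.1:443/api",
    "annotations" => { "example.com/owner" => "team-a" },
    "labels" => { "app" => "web", "pod-template-hash" => "5d4f8c7b9" }
  }
}

size_before = JSON.generate(record).bytesize
strip_metadata(record)
size_after = JSON.generate(record).bytesize

puts JSON.pretty_generate(record)
puts "bytes before: #{size_before}, after: #{size_after}"
```

In the Fluentd config the same calls sit inside a single ${...} expression. Its result lands in the throwaway key for_remove, which remove_keys then drops. Note that the calls assume the docker, kubernetes and labels keys are present in the record.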

    <filter kubernetes.**>
      @type record_transformer
      enable_ruby
      # Remove unwanted metadata keys
      <record>
        for_remove ${record["docker"].delete("container_id");record["kubernetes"].delete("annotations"); record["kubernetes"]["labels"].delete("pod-template-hash"); record["kubernetes"].delete("master_url"); record["kubernetes"].delete("pod_id"); record["kubernetes"].delete("namespace_id");}
      </record>
      remove_keys for_remove
    </filter>

The second simple modification is to add an exclusion rule to the source to get rid of the kube-apiserver logs:

    <source>
      @type tail
      @id in_tail_container_logs
      path /var/log/containers/*.log
      exclude_path ["/var/log/containers/kube-apiserver*"]
      pos_file /var/log/fluentd-containers.log.pos
      tag kubernetes.*
      read_from_head true
      format json
      time_format %Y-%m-%dT%H:%M:%S.%NZ
    </source>

And the last step is creating the ConfigMap and modifying the DaemonSet to use this ConfigMap as a volume mount.

Update: It is also worth adding a new env variable to the DaemonSet container spec: FLUENT_UID=0. Otherwise an error like unexpected error error_class=Errno::EACCES error=<Errno::EACCES: Permission denied @ rb_sysopen - /var/log/fluentd-containers.log.pos> can occur.
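Before rolling the config out, the exclude_path rule from the source block above can be sanity-checked with a few lines of Ruby. This is only an approximation: it uses File.fnmatch on a list of invented file names instead of letting Fluentd expand the patterns on a real node, and the tailed_files helper is hypothetical.

```ruby
# Patterns copied from the <source> block in this post.
INCLUDE_PATTERN = "/var/log/containers/*.log"
EXCLUDE_PATTERNS = ["/var/log/containers/kube-apiserver*"]

# Hypothetical helper: keeps the paths that match the include pattern
# and none of the exclude patterns.
def tailed_files(candidates)
  candidates.select do |path|
    File.fnmatch(INCLUDE_PATTERN, path) &&
      EXCLUDE_PATTERNS.none? { |pattern| File.fnmatch(pattern, path) }
  end
end

# Invented file names in the usual pod_namespace_container-id layout.
candidates = [
  "/var/log/containers/web-5d4f8c7b9-x2x7k_default_web-0123.log",
  "/var/log/containers/kube-apiserver-master-1_kube-system_kube-apiserver-4567.log",
  "/var/log/containers/kube-proxy-abcde_kube-system_kube-proxy-89ab.log"
]

puts tailed_files(candidates)
```

Only names that start with kube-apiserver are dropped, so other kube-system containers such as kube-proxy are still tailed.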

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: fluentd-config
      namespace: kube-system
    data:
      kubernetes.conf: |-
        <match fluent.**>
          @type null
        </match>

        <source>
          @type tail
          @id in_tail_container_logs
          path /var/log/containers/*.log
          exclude_path ["/var/log/containers/kube-apiserver*"]
          pos_file /var/log/fluentd-containers.log.pos
          tag kubernetes.*
          read_from_head true
          format json
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </source>

        <source>
          @type tail
          @id in_tail_minion
          path /var/log/salt/minion
          pos_file /var/log/fluentd-salt.pos
          tag salt
          format /^(?<time>[^ ]* [^ ,]*)[^\[]*\[[^\]]*\]\[(?<severity>[^ \]]*) *\] (?<message>.*)$/
          time_format %Y-%m-%d %H:%M:%S
        </source>

        <source>
          @type tail
          @id in_tail_startupscript
          path /var/log/startupscript.log
          pos_file /var/log/fluentd-startupscript.log.pos
          tag startupscript
          format syslog
        </source>

        <source>
          @type tail
          @id in_tail_docker
          path /var/log/docker.log
          pos_file /var/log/fluentd-docker.log.pos
          tag docker
          format /^time="(?<time>[^)]*)" level=(?<severity>[^ ]*) msg="(?<message>[^"]*)"( err="(?<error>[^"]*)")?( statusCode=(?<status_code>\d+))?/
        </source>

        <source>
          @type tail
          @id in_tail_etcd
          path /var/log/etcd.log
          pos_file /var/log/fluentd-etcd.log.pos
          tag etcd
          format none
        </source>

        <source>
          @type tail
          @id in_tail_kubelet
          multiline_flush_interval 5s
          path /var/log/kubelet.log
          pos_file /var/log/fluentd-kubelet.log.pos
          tag kubelet
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_kube_proxy
          multiline_flush_interval 5s
          path /var/log/kube-proxy.log
          pos_file /var/log/fluentd-kube-proxy.log.pos
          tag kube-proxy
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_kube_apiserver
          multiline_flush_interval 5s
          path /var/log/kube-apiserver.log
          pos_file /var/log/fluentd-kube-apiserver.log.pos
          tag kube-apiserver
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_kube_controller_manager
          multiline_flush_interval 5s
          path /var/log/kube-controller-manager.log
          pos_file /var/log/fluentd-kube-controller-manager.log.pos
          tag kube-controller-manager
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_kube_scheduler
          multiline_flush_interval 5s
          path /var/log/kube-scheduler.log
          pos_file /var/log/fluentd-kube-scheduler.log.pos
          tag kube-scheduler
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_rescheduler
          multiline_flush_interval 5s
          path /var/log/rescheduler.log
          pos_file /var/log/fluentd-rescheduler.log.pos
          tag rescheduler
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_glbc
          multiline_flush_interval 5s
          path /var/log/glbc.log
          pos_file /var/log/fluentd-glbc.log.pos
          tag glbc
          format kubernetes
        </source>

        <source>
          @type tail
          @id in_tail_cluster_autoscaler
          multiline_flush_interval 5s
          path /var/log/cluster-autoscaler.log
          pos_file /var/log/fluentd-cluster-autoscaler.log.pos
          tag cluster-autoscaler
          format kubernetes
        </source>

        # Example:
        # 2017-02-09T00:15:57.992775796Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" ip="104.132.1.72" method="GET" user="kubecfg" as="<self>" asgroups="<lookup>" namespace="default" uri="/api/v1/namespaces/default/pods"
        # 2017-02-09T00:15:57.993528822Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" response="200"
        <source>
          @type tail
          @id in_tail_kube_apiserver_audit
          multiline_flush_interval 5s
          path /var/log/kubernetes/kube-apiserver-audit.log
          pos_file /var/log/kube-apiserver-audit.log.pos
          tag kube-apiserver-audit
          format multiline
          format_firstline /^\S+\s+AUDIT:/
          # Fields must be explicitly captured by name to be parsed into the record.
          # Fields may not always be present, and order may change, so this just looks
          # for a list of key="\"quoted\" value" pairs separated by spaces.
          # Unknown fields are ignored.
          # Note: We can't separate query/response lines as format1/format2 because
          # they don't always come one after the other for a given query.
          format1 /^(?<time>\S+) AUDIT:(?: (?:id="(?<id>(?:[^"\\]|\\.)*)"|ip="(?<ip>(?:[^"\\]|\\.)*)"|method="(?<method>(?:[^"\\]|\\.)*)"|user="(?<user>(?:[^"\\]|\\.)*)"|groups="(?<groups>(?:[^"\\]|\\.)*)"|as="(?<as>(?:[^"\\]|\\.)*)"|asgroups="(?<asgroups>(?:[^"\\]|\\.)*)"|namespace="(?<namespace>(?:[^"\\]|\\.)*)"|uri="(?<uri>(?:[^"\\]|\\.)*)"|response="(?<response>(?:[^"\\]|\\.)*)"|\w+="(?:[^"\\]|\\.)*"))*/
          time_format %FT%T.%L%Z
        </source>

        <filter kubernetes.**>
          @type kubernetes_metadata
          @id filter_kube_metadata
        </filter>

        <filter kubernetes.**>
          @type record_transformer
          enable_ruby
          # Remove unwanted metadata keys
          <record>
            for_remove ${record["docker"].delete("container_id");record["kubernetes"].delete("annotations"); record["kubernetes"]["labels"].delete("pod-template-hash"); record["kubernetes"].delete("master_url"); record["kubernetes"].delete("pod_id"); record["kubernetes"].delete("namespace_id");}
          </record>
          remove_keys for_remove
        </filter>

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluentd
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: fluentd
      namespace: kube-system
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - namespaces
      verbs:
      - get
      - list
      - watch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: fluentd
    roleRef:
      kind: ClusterRole
      name: fluentd
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: ServiceAccount
      name: fluentd
      namespace: kube-system
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: fluentd
      namespace: kube-system
      labels:
        k8s-app: fluentd-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      template:
        metadata:
          labels:
            k8s-app: fluentd-logging
            version: v1
            kubernetes.io/cluster-service: "true"
        spec:
          serviceAccount: fluentd
          serviceAccountName: fluentd
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: fluentd
            image: fluent/fluentd-kubernetes-daemonset:v1.3-debian-elasticsearch
            env:
              - name: FLUENT_ELASTICSEARCH_HOST
                value: "elasticsearch-logging"
              - name: FLUENT_ELASTICSEARCH_PORT
                value: "9200"
              - name: FLUENT_ELASTICSEARCH_SCHEME
                value: "http"
              # X-Pack Authentication
              # =====================
              - name: FLUENT_ELASTICSEARCH_USER
                value: "elastic"
              - name: FLUENT_ELASTICSEARCH_PASSWORD
                value: "changeme"
              - name: FLUENT_UID
                value: "0"
            resources:
              limits:
                memory: 200Mi
              requests:
                cpu: 100m
                memory: 200Mi
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: config
              mountPath: /fluentd/etc/kubernetes.conf
              subPath: kubernetes.conf
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
          - name: config
            configMap:
              name: fluentd-config

Tags: Docker, Elasticsearch, Fluentd, Kubernetes

Category: Kubernetes
